There are two reasons why there shouldn’t be a behavior editor tab.

The first is that if behavior can be simplified enough to fit in a tab, then that simplicity can’t represent some of the complex tactics that the player or another species could have discovered. Take the helicopter build of 0.5.6 for example.

A cell can be covered in cilia and use rotation to swing its spikes around, instead of moving its whole body forward. The aggressiveness slider doesn’t consider how the attack happens, so it might conclude that an attack can’t be done when it can. If the aware stage ends up being like Spore (with attacks such as bite, charge and spit), the aggressiveness slider won’t consider the order in which they are used either.

But if we don’t try to simplify behavior into an editor tab and instead let the AI do anything it wants, then some great behaviors can evolve. For example, in the aware stage you could meet a pack of velociraptors that use a spit attack followed by a feigned retreat and a double envelopment.

And I admit that I want to see an AI find a way to break the game’s physics engine.

It is possible to try to come up with every possible behavior and represent it in a behavior editor, but that would take developer time and effort that could have gone into other parts of the game, and the resulting tab would be so complicated that most players wouldn’t use it.

The second reason is the mismatch between the behavior of the one cell the player controls and the millions of other cells in the player’s species. Ask yourself this question:

Why are we even playing this game?

The purpose of the game, as hhyyrylainen said, is to play as an intelligent designer and beat evolution. But if we could already declare in the editor that Primum thrivium is responsive and opportunistic, the time we spend outside the editor would become useless. Everything about the species would already be defined. We could just play an observation mode instead and see how our species is doing. That could work, but it would be more like The Bibites or The Sapling; it wouldn’t be Thrive.

The game should observe how the player plays, and copy that style to the other members of the player’s species.

Otherwise, we run into a problem. Auto-evo needs to find out how successful the player’s species is, and that success depends on behavior, but we have two different behaviors: the behavior of the player, and the behavior the player assigns to the species.

Which behavior is used to measure the success of the player’s species? There is an existing attempt to reconcile the two.

But why keep two different behaviors for the same species and try to average them out? Behavior may not be as big a priority as the design of the organism’s body, but a body is nothing without behavior: you can add as many spikes as you want, but they are useless if you can’t hit a target. The average of the successes of two different behaviors is the success of a behavior that doesn’t exist. It is a known exploit that you can lower the resource seeking of your species so that its members won’t deplete the compound clouds on your screen; that should only benefit the one cell you control, but it still ends up helping the player’s species.

Here is a bug that results from the attempt at reconciliation

Never dipping below a population of 50 suggests that the real population should be much higher, but the player is unable to use the behavior editor as well as s/he plays.

The helicopter build makes it evident how big the mismatch can be

You might be asking how a behavior can be inferred from the way the player plays. To that I would say: evolution. An evolutionary algorithm is used by other species to find the perfect behavior for their environment, and an evolutionary algorithm can also be used to find the behavior that is most likely to make the same movements and actions as the player. It doesn’t try to survive; it survives only if the player survives in the same situations. And with that, the mismatch disappears.

I don’t think the player should be able to enter the editor after surviving for just one generation. There are many exceptions to that measure of success: with one generation you can’t evolve childcare, have r/K selection, experience both the good and the bad times of a Lotka–Volterra cycle[1], or have multigenerational migrations later in the aware stage.

I think we should only be able to enter the editor once the AI fully understands how we play, and that behavior makes our species survive. (If the species is badly designed, then no behavior can make it survive.)

The accusations of being Lamarckian

You can skip to the next chapter for the algorithm

Oliveriver said

I don’t agree with the interpretation that gameplay determining behavior is similar to life events determining genetics, and that it is a necessary evil or a “maybe we can do that” evil. A new behavior can appear within one generation (for a species with a brain), but I don’t mean that either.

We change the species in the editor and the other species change by themselves, but the game stops every 100 million years and tests whether those changes are good; otherwise there wouldn’t be selection. When we exit the editor, the cells are supposed to already have a behavior. But that behavior is being tested right now. It wasn’t tested in the previous 100 million years, just like the physical changes weren’t.

The behavior could have been designed in the editor too, but I’ve stated my dislike for its coexistence with gameplay that isn’t an observation mode.

Here is my own interpretation. The gameplay is an editor too. In the normal editor we design the species, and during gameplay we design its behavior. And instead of saying that I am aggressive towards everyone, I can specify the strategies I use against every species I prey on.

- Cell design/physical evolution => tries to increase traits (speed, DPS, etc.)

- Gameplay/behavioral evolution => tries to survive

When I first enter the game, the neural networks aren’t trained, but by the time I exit, I have fully designed my creature, and only then does the behavior represent the behavior of my species at that point in its evolution.

I just noticed that since the evolution of other species on the planet already happens in the background, training the neural networks would require additional computational power. It might require wait screens. I came up with a solution for that, but it can’t be done right now.

Since evolution only happens in the first 3 stages, if people play Thrive online, the evolution for players in the cell stage could be simulated on the computers of people playing the society stage. That way, every stage would be just a little slow, instead of the cell stage crashing while the space stage runs super smoothly.

Storing and changing behavior

I don’t know whether Thrive uses logic gates, finite state machines or neural networks for its AIs. I don’t know any programming other than Python, and I have only watched a few YouTube videos about neural networks. I will use this section to suggest how behavior mutations could happen. I know that Thim wanted to get behavior from the player’s playstyle but later gave up, so you can use this for inspiration if it is a good proposal.

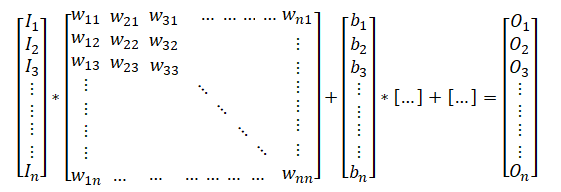

This is how I think neural networks work

Inputs: The distance to another cell, the angle to that cell, the distance and angle to the compound cloud, time passed since birth, the amount of light, hunger level, health, signaling agents, etc.

Outputs: The direction the cell decides to go, the speed it wants to go, where the oxytoxy or mucilage is thrown, whether the cell divides or waits or enters dormancy, signaling agents, etc.

Ignore the fact that the weight matrix should have been on the left of the input matrix.

The neural network takes a bunch of inputs, multiplies the input vector by the weight matrix, adds the biases and gives us the outputs. This multiplying and adding happens a few times (once per layer), and an activation function is applied after each time.
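To make the forward pass concrete, here is a minimal sketch in plain Python. It assumes a small fully connected network with tanh as the activation function, and it puts the weight matrix on the left of the input, as it should be. The layer sizes, weights and input meanings are made up for illustration.

```python
import math

def layer(inputs, weights, biases):
    """One layer: weight matrix times input vector, plus biases, then tanh."""
    return [
        math.tanh(sum(w * x for w, x in zip(row, inputs)) + bias)
        for row, bias in zip(weights, biases)
    ]

def forward(inputs, network):
    """Apply each (weights, biases) layer in turn; the last layer gives the outputs."""
    values = inputs
    for weights, biases in network:
        values = layer(values, weights, biases)
    return values

# Hypothetical example: 3 inputs -> 2 hidden neurons -> 2 outputs.
inputs = [0.5, -0.2, 0.9]  # e.g. distance to a cell, angle to it, hunger level
network = [
    ([[0.1, 0.4, -0.3], [0.7, -0.5, 0.2]], [0.0, 0.1]),
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),
]
speed, turn = forward(inputs, network)  # each output lies between -1 and 1
```

Changing any number in `network` changes what the cell does for the same inputs, which is why those matrices are the behavior.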

The matrices in the middle part represent the behavior. For any input, the same arithmetic operations are performed and an output is obtained. If the middle part changes, the behavior changes.

How do we approximate the behavior of the player? Let’s say that the player goes towards the ammonia cloud when the cell is low on ammonia. Take a behavior, make a few copies of it and randomly change some numbers in all of them except one. Give all of them the same inputs and look at their outputs. Take the one that did something closest to moving towards the ammonia cloud, delete the others, mutate the survivor, and look at the outputs of the second generation. Repeat this until the behavior obtained is similar enough to the player’s behavior.
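The loop above is a mutate-and-select search, a simple form of hill climbing. Here is a minimal sketch, assuming a behavior is just a list of numbers, that a single weighted sum stands in for the full network, and that “closest to the player” means the smallest squared difference from recorded player outputs. All function names and the toy recordings are hypothetical.

```python
import random

def act(behavior, inputs):
    """Stand-in for a neural network: a single weighted sum (hypothetical)."""
    return sum(w * x for w, x in zip(behavior, inputs))

def error(behavior, recordings):
    """How far the behavior's outputs are from what the player actually did."""
    return sum((act(behavior, inputs) - player) ** 2 for inputs, player in recordings)

def mutate(behavior, rng, scale=0.1):
    """Randomly change every number a little."""
    return [w + rng.gauss(0.0, scale) for w in behavior]

def imitate(recordings, size, copies=8, generations=2000, tolerance=1e-3, seed=0):
    """Mutate and select until the behavior is close enough to the player's."""
    rng = random.Random(seed)
    best = [0.0] * size
    for _ in range(generations):
        if error(best, recordings) < tolerance:
            break
        # One copy stays unmutated, so a generation can never make things worse.
        candidates = [best] + [mutate(best, rng) for _ in range(copies)]
        best = min(candidates, key=lambda b: error(b, recordings))
    return best, error(best, recordings)

# Toy recordings: (inputs the cell saw, output the player produced).
recordings = [([1.0, 0.0], 2.0), ([0.0, 1.0], -1.0), ([1.0, 1.0], 1.0)]
behavior, err = imitate(recordings, size=2)  # ends up close to [2.0, -1.0]
```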

The first matrices we start with can be full of random numbers, and that would be good enough eventually, but in practice it would likely start from a non-random behavior; I will come to that later. Comparing the output of the behavior to the player’s behavior requires a reward function. The movements can be compared by looking at how similar the numbers for speed and direction are. For something like shooting oxytoxy, the directions can be compared the same way, and the decision to shoot would be a number too: shooting happens if the number becomes larger than some threshold. Getting that number above the threshold close to the time when the player pressed the shooting button could be rewarded.

The other AIs would be evolved not to copy something, but to survive in an environment where other species and the player’s species exist.
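One possible shape for such a reward function, as a sketch only. It assumes each recorded frame stores the player’s speed, direction in radians and whether the shoot button was pressed, and that the behavior outputs a speed, a direction and a shoot value that fires above a threshold. The threshold of 0.5, the 10-frame cap and all names are hypothetical.

```python
import math

SHOOT_THRESHOLD = 0.5  # hypothetical: the behavior "shoots" when its output exceeds this
MAX_GAP = 10           # hypothetical cap, in frames, on the timing penalty

def angle_difference(a, b):
    """Smallest absolute difference between two directions in radians (0 to pi)."""
    d = (a - b) % (2 * math.pi)
    return min(d, 2 * math.pi - d)

def timing_gaps(frames, targets):
    """For each frame in `frames`, distance to the nearest frame in `targets`, capped."""
    if not targets:
        return MAX_GAP * len(frames)
    return sum(min(min(abs(f - t) for t in targets), MAX_GAP) for f in frames)

def reward(outputs, recording):
    """Score one recorded encounter; 0 is a perfect copy, more negative is worse.

    outputs:   list of (speed, direction, shoot_value) produced by the behavior
    recording: list of (speed, direction, pressed_shoot) recorded from the player
    """
    score = 0.0
    for (s_out, d_out, _), (s_pl, d_pl, _) in zip(outputs, recording):
        score -= abs(s_out - s_pl) + angle_difference(d_out, d_pl) / math.pi
    ai_shots = [i for i, (_, _, v) in enumerate(outputs) if v > SHOOT_THRESHOLD]
    player_shots = [i for i, (_, _, pressed) in enumerate(recording) if pressed]
    # Missed shots and extra shots both cost more the further off in time they were.
    score -= timing_gaps(player_shots, ai_shots) + timing_gaps(ai_shots, player_shots)
    return score
```

A shot on the same frame as the player’s costs nothing and each frame of delay costs a little, so the behavior is pushed towards shooting at the right moment rather than just shooting at some point.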

Isn’t that what already happens in Thrive?

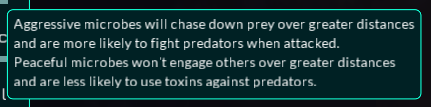

Mutating the values of the six sliders is similar to mutating the numbers in the behavior matrices. And every combination of those sliders makes sense, unlike the matrices, where random numbers would truly result in random behaviors, or spinning in circles as the creator said. But sliders, as I said, are restrictive. Take the peaceful/aggressive slider for example.

It isn’t possible for the behavior of “not chasing down prey over long distances but being likely to fight predators when attacked” to evolve, even if it were very useful. Not restricting behavior to certain categories, although harder to accomplish in some ways, would enrich the Thrive experience; at least for me it very much would.

Behavior modes

A large enough neural network can have very complex behaviors; it can hunt, graze and plan for the future at the same time. But it should be broken down into several smaller neural networks, with the environmental conditions deciding which one controls the cell at any moment.

The reason is given in the developer forums

Going towards a compound cloud shouldn’t be one of the considerations of a cell that is being chased by a predator. They then go on to suggest 6 different behavior modes, but I think two are enough.

- Fight or flight mode: This mode is activated whenever you see either your prey or your predator.

- Grazing mode: This mode is active whenever the fight or flight mode is off.
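A minimal sketch of how the active mode could be chosen each frame. It assumes every species keeps one grazing network plus a table of fight-or-flight networks keyed by the species it reacts to, and that visible species are passed in priority order. The `Species` class and the network placeholders (plain strings here) are hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class Species:
    name: str
    grazing: str  # placeholder for the grazing network
    fight_or_flight: dict = field(default_factory=dict)  # other species -> network

def choose_network(species, visible):
    """Return the network that controls the cell this frame.

    visible: names of the species the cell currently sees, highest priority first.
    If any of them is prey or a predator, its fight-or-flight network is used;
    otherwise the cell stays in grazing mode.
    """
    for other in visible:
        if other in species.fight_or_flight:
            return species.fight_or_flight[other]
    return species.grazing

c = Species("C", grazing="graze", fight_or_flight={"A": "sidestep A", "B": "flee B"})
print(choose_network(c, ["D", "B"]))  # flee B  (D is neither prey nor predator)
print(choose_network(c, ["D"]))       # graze
```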

I have a second reason why there should be different behavior modes. A species needs a different fight/flight response for different predators and prey. But species change. Consider the following scenario. Species A preys on species B. Later, species A speciates into species C and D.

Species B knows nothing about species C and D. But it knows how species A behaved. So it should copy the fight or flight behavior it had for species A and use it for species C and D. Then it should start tweaking that matrix into the matrices for C and D.
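Copying B’s behavior toward A into separate starting points for C and D could look like this. The sketch assumes the fight-or-flight table is a dictionary from species name to a list of numbers, which is a hypothetical representation; `copy.deepcopy` makes each copy independent, so tweaking the matrix for C doesn’t change the one for D.

```python
import copy

def on_speciation(fight_or_flight, parent, children):
    """Give each new species a copy of the network used against its parent species."""
    if parent not in fight_or_flight:
        return  # this species never interacted with the parent
    template = fight_or_flight[parent]
    for child in children:
        fight_or_flight[child] = copy.deepcopy(template)
    # The entry for the parent can be dropped separately if it no longer exists.

b_table = {"A": [0.2, -0.4, 0.9]}
on_speciation(b_table, "A", ["C", "D"])
b_table["C"][0] += 0.05  # start tweaking the copy for C
print(b_table["D"])      # [0.2, -0.4, 0.9]: the copy for D is unaffected
```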

Every species should have a different fight/flight mode for every species it interacts with, because the number and types of those species can change and I don’t think a single AI can handle that. These don’t have to be very different. A species can have a general “escape from predator” behavior and only modify it slightly for each predator. Alternatively, only different types of predators may receive different fight/flight modes. For example, a mouse may escape from a snake by climbing a tree and from an eagle by digging down. How do we know whether two predators are different enough? At first, the same matrix can be trained to survive against all predators. If training on one predator turns out to lower the success against another one, then it is time to have two different matrices.

If two species don’t have a predator-prey relationship, they can be in grazing mode when next to each other. In cases of cannibalism, a species can enter fight/flight mode if it sees a member of the same species.
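The test for splitting a shared matrix could be sketched like this, assuming success rates against each predator can be measured before and after a training step on one of them. The tolerance of 0.02 and the function name are hypothetical, and the numbers in the usage line are invented to mirror the mouse example.

```python
def hurt_by_training(before, after, trained_on, tolerance=0.02):
    """Predators the shared matrix got noticeably worse against after training on one.

    before, after: dict predator -> success rate (0 to 1) of the shared matrix.
    A non-empty result suggests it is time to split into separate matrices.
    """
    return [
        predator
        for predator in before
        if predator != trained_on and after[predator] < before[predator] - tolerance
    ]

# Invented numbers: training against the snake helps there but hurts against the eagle.
print(hurt_by_training({"snake": 0.6, "eagle": 0.7}, {"snake": 0.8, "eagle": 0.5}, "snake"))
# ['eagle']
```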

Size

Let’s look at the amount of data that would be stored for the behaviors in a Thrive game. Here is the calculation for another behavior system

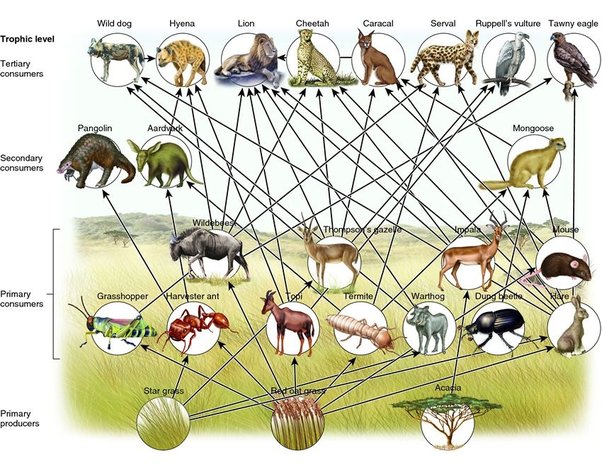

It seems like the early developers wanted to have a thousand species. I will go with a smaller number, like 30, for a single patch. I don’t know how many numbers a neural network would need to store; I will assume 1000. Not every species preys on every other one[2], so n^2 fight or flight modes aren’t needed, based on this picture, which may or may not be realistic[3].

Let’s say that a species has three fight or flight behaviors on average, and a grazing behavior. So the result is 1000 x 30 x 4 = 120,000 numbers, or about 120 kilobytes if each number takes one byte. If we instead say that there are 60 species, each with 10 behaviors and 10,000 numbers per neural network, we get 6,000,000 numbers, or about 6 megabytes, closer to the estimate for the other behavior system.
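The same arithmetic as a quick check. The storage per number is an assumption: one byte per number is what turns 120,000 numbers into 120 kilobytes, while 32-bit floats, a common choice for network weights, would make every total four times larger.

```python
def behavior_storage_bytes(species, behaviors_per_species, numbers_per_network, bytes_per_number):
    """Bytes needed to store every behavior network in one patch."""
    return species * behaviors_per_species * numbers_per_network * bytes_per_number

small = behavior_storage_bytes(30, 4, 1_000, 1)            # 120,000 bytes, about 120 kB
large = behavior_storage_bytes(60, 10, 10_000, 1)          # 6,000,000 bytes, about 6 MB
large_float32 = behavior_storage_bytes(60, 10, 10_000, 4)  # 24,000,000 bytes, about 24 MB
```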

Another change is that behaviors don’t only change when two species fight on screen. Their fights are also simulated in the background. But mini simulations aren’t as costly as a normal simulation.

What to train on and what to train for

Auto-evo needs some data. In nickthenick’s auto-evo algorithm, which I haven’t read all of, the cells are approximated as moving randomly and, just like colliding molecules, they come into close contact and then separate. Each encounter is either a successful or an unsuccessful hunt for the predator (if those two species have a predator-prey relationship).

The field of vision is greater in the aware stage than in the cell stage. I want to focus on how the results of the encounters are found.

For that, mini simulations can be used. In Rain World, all the creatures are still simulated when they are off screen, but they aren’t rendered, so they don’t use too much computing power. I am not saying that a much larger area should be simulated; I am saying that only the events of importance, the encounters, should be simulated.

How often each species bumps into each other species is calculated from the formula. How much HP, glucose, mucilage etc. they have on average before the encounters is also calculated. Then all of these encounters are simulated. From the simulation results, how much HP, glucose and mucilage they have after the encounters (or whether they die) is found and fed back into the formula.

Each simulation is done from the point of view of one species[4]. Its behavior is mutated into multiple versions and a few simulations are run at the same time. The behavior in the simulation with the most favorable result is kept and the other behaviors are deleted. In the next set of simulations, the other species mutates and tries to find a defense against the behavior the first species evolved[5].
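The alternating rounds can be sketched as below. This only illustrates the turn-taking: each behavior is reduced to a single number, and `simulate` is an invented toy score (the predator does better the closer its number is to the prey’s), not Thrive’s simulation. Keeping the unmutated parent among the candidates means a round never makes that side worse against its current opponent.

```python
import random

def simulate(predator, prey):
    """Toy stand-in for a mini simulation: the predator's score for one encounter."""
    return -abs(predator - prey)

def improve(side, opponent, score, rng, copies=5, scale=0.5):
    """Mutate one side's behavior, 'simulate' each copy, keep the best result."""
    candidates = [side] + [side + rng.gauss(0.0, scale) for _ in range(copies)]
    return max(candidates, key=lambda c: score(c, opponent))

def coevolve(predator, prey, rounds, seed=0):
    rng = random.Random(seed)
    for _ in range(rounds):
        # Predator's turn: maximize its own score against the current prey.
        predator = improve(predator, prey, simulate, rng)
        # Prey's turn: find a defense, i.e. minimize the predator's score.
        prey = improve(prey, predator, lambda p, q: -simulate(q, p), rng)
    return predator, prey

predator, prey = coevolve(0.0, 3.0, rounds=5)
```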

Which encounters should be simulated more often? The more common ones? Not really. Two species can have friendly relations, but what matters to a species’ survival are the deadly encounters.

For every species, the number of simulations should be proportional to how badly it is affected. For example, a species may be able to escape a predator every time, but it might run out of mucilage doing so, be unable to hunt its own prey on average, and starve. That species would try to come out of its encounters with its predator with more mucilage left, and some HP loss would be tolerable. Its predator wouldn’t be simulated often to evolve counter behaviors, because it can’t catch the mucilage species anyway and that species isn’t a major food source for it. But if the mucilage species starts dying, things can change.
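Splitting a fixed simulation budget in proportion to how harmful each kind of encounter is might look like this. It assumes the auto-evo formula already gives each encounter type a non-negative harm score; the encounter names and numbers are hypothetical. Largest-remainder rounding keeps the counts adding up to the budget.

```python
def allocate_simulations(harm, budget):
    """Split `budget` simulations across encounter types in proportion to harm.

    harm: dict encounter type -> non-negative harm score.
    """
    total = sum(harm.values())
    if total == 0:
        return {encounter: 0 for encounter in harm}  # nothing is hurting this species
    exact = {e: budget * h / total for e, h in harm.items()}
    counts = {e: int(x) for e, x in exact.items()}
    leftover = budget - sum(counts.values())
    # Give the remaining simulations to the largest fractional parts.
    for e in sorted(exact, key=lambda e: exact[e] - counts[e], reverse=True)[:leftover]:
        counts[e] += 1
    return counts

print(allocate_simulations(
    {"escape predator": 6.0, "hunt prey": 3.0, "neutral neighbor": 1.0}, 100))
# {'escape predator': 60, 'hunt prey': 30, 'neutral neighbor': 10}
```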

What a species needs to improve in order to survive can be found in one of the formulas in nickthenick’s auto-evo algorithm. For example, in later stages a species may try to decrease its fatalities by reducing the number of encounters, through a mutation in its grazing mode.

A species that is unable to increase its survival rate with a change in behavior can be declared extinct. This happens if other species are more successful in filling the niches it could have filled, or if there is an ecosystem collapse due to an extinction event.

The events happening on the player’s screen could also be considered mini simulations. It was suggested that those should be the only things changing how different species interact with each other, but I don’t think that would be enough to properly simulate extinction.

The player’s species is trained on the player’s behavior, unlike the other species (those are trained to some extent on the player’s behavior, but more so on the player’s modeled behavior in the background).

When to train

The neural networks are constantly trained in the background. Once it becomes apparent that a species won’t go extinct, its normal evolution for the next generation can also start.

The player’s species is recorded whenever an encounter with another species happens. Recordings can also be made during normal gameplay for the grazing mode. The recordings of encounters are used to train the neural network that models the player’s behavior. The encounters don’t have to happen often, because the same recordings are used for training over and over.

The case of multiple opponents

hhyyrylainen said

It is hard if one tries to write conditional statements for every case, but an AI can be trained in all situations if it can get training data for them.

First of all, let’s look at a case of more than two species fighting each other. I will get to the player later.

Species A: Has slime jets

Species B: A more bulky build

Species C: Prey

Species C has two different behaviors. When it sees species A coming at it, it moves sideways. When Species B approaches it, it moves directly away from it. What would it do if both of them approach it?

I think it should only interact with the one closest to it and ignore the one farther away. Because if it had, let’s say, 3 predators and tried to develop a behavior to defend against every combination of them, it would need 7 behaviors, not 3, which takes a lot more computation and time to train.
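The number 7 counts every non-empty group of predators that could attack at the same time, which is 2^n - 1 for n predator species, so the cost grows exponentially rather than linearly. A quick check, with a hypothetical third predator “X”:

```python
from itertools import combinations

def attacker_groups(predators):
    """Every non-empty group of predator species that could attack at once."""
    return [
        group
        for size in range(1, len(predators) + 1)
        for group in combinations(predators, size)
    ]

print(len(attacker_groups(["A", "B", "X"])))            # 7, i.e. 2**3 - 1
print(len(attacker_groups(["A", "B", "X", "Y", "Z"])))  # 31, versus 5 single-predator behaviors
```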

But the priority should be on the predator that would reach it first, not necessarily the one that is closest. So C normally runs away from B, but once A shoots its slime, A would reach C first if C stayed still, so C switches to its behavior for defending against A and ignores B for the moment. But maybe B is on the right side, in which case C should move to the left to avoid A, not to the right. So maybe C should see the location of B, just without knowing that it is B.
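Choosing the predator that would reach C first comes down to sorting by time to contact: distance divided by the part of the predator’s velocity that points at C. A sketch assuming 2D positions and velocities and, as in the text, that C is standing still; predators that aren’t approaching get an infinite time. The coordinates are made up.

```python
import math

def time_to_reach(predator_pos, predator_vel, prey_pos):
    """Distance divided by the speed component pointing at the (stationary) prey."""
    dx = prey_pos[0] - predator_pos[0]
    dy = prey_pos[1] - predator_pos[1]
    distance = math.hypot(dx, dy)
    if distance == 0:
        return 0.0
    closing_speed = (predator_vel[0] * dx + predator_vel[1] * dy) / distance
    return distance / closing_speed if closing_speed > 0 else math.inf

def main_threat(predators, prey_pos):
    """Name of the predator that would reach the prey first."""
    return min(predators, key=lambda name: time_to_reach(*predators[name], prey_pos))

c_pos = (0.0, 0.0)
before_jet = {"A": ((0.0, 10.0), (0.0, -1.0)), "B": ((4.0, 0.0), (-1.0, 0.0))}
after_jet = {"A": ((0.0, 10.0), (0.0, -5.0)), "B": ((4.0, 0.0), (-1.0, 0.0))}
print(main_threat(before_jet, c_pos))  # B: closer, arrives in 4 versus 10
print(main_threat(after_jet, c_pos))   # A: farther, but arrives in 2 versus 4
```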

The inputs for the fight or flight mode against a species

- A: main enemy

- B: any enemy that isn’t the same species as the main enemy

- C: the prey

- O: an object like an iron chunk or a herbivore cell

- CL: a mucilage or poison cloud (no need to know about compound clouds in fight/flight mode)

1: The direction of A

2: The distance of A divided by the speed towards C

3: The direction of the second A

4: The distance of A2 divided by its speed towards C (the time value in 4 is more than 2)

(there can be more A’s, I am just skipping)

5: The direction of B

6: The distance of B divided by the speed towards C (6 is more than 2, otherwise the behavior for B would be activated)

7: The direction of B2

8: The distance of B2 divided by the speed towards C (8 is more than 6)

9-16: The speed and directions of O’s and CL’s

Maybe instead of the time it takes to reach C, the input should be one over that time. That way, if there are fewer than the maximum number of predators/objects, the value entered for the empty slots can be zero, which means infinitely far away, and the number it multiplies in the matrix would contribute nothing. But that still leaves out the direction. Maybe radial coordinates weren’t a good idea. I thought that players could prefer moving upwards rather than downwards in the cell stage, but it would be unnecessary to teach preferences like that to the species, so every cell in the species could have a random number added to its radial coordinates so that it would average out. Also, the direction towards the prey doesn’t make sense outside of radial coordinates. Anyway.

These are the inputs the cells receive, and they evolve their behavior from them. If it turns out that most fatalities happen when 4 members of the predator species with mucilage and spikes attack together, then those encounters are simulated more and the species tries to develop a counter behavior.

Multiple species attacking together doesn’t get its own simulation; the training from single-species attacks is used in such cases.

But yes, two species can have a symbiosis in behavior, such as humans and dolphins that hunt fish together, or coyotes and badgers. In those cases, I think it can be like Spore: the symbiosis is recognized by the game, and if the player is the one doing it, the team members are shown on the right side of the screen. Their prey would develop a counter behavior against both of them, but that doesn’t increase the number of behaviors the prey needs; it decreases it by one, unless the predators also hunt by themselves.
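A sketch of assembling inputs 1-16 with the “one over time” idea. It assumes each seen thing arrives as a (direction, time to reach C) pair, treats O’s and CL’s the same way as the predators (an assumption), and uses fixed slot counts matching the list above: two A’s, two B’s and four O’s or CL’s. Empty slots are filled with zeros; as the paragraph notes, a zero direction in an empty slot is still ambiguous. All names are hypothetical.

```python
import math

def encode_slots(things, slots):
    """Flatten up to `slots` (direction, time_to_reach) pairs into network inputs.

    The thing that would arrive first fills the first slot. Each slot holds the
    direction and 1 / time, so an unused slot (0.0, 0.0) reads as "infinitely far".
    """
    ordered = sorted(things, key=lambda thing: thing[1])[:slots]
    values = []
    for direction, time in ordered:
        # Guard against division by zero for something already touching C.
        urgency = 0.0 if math.isinf(time) else 1.0 / max(time, 1e-6)
        values += [direction, urgency]
    values += [0.0, 0.0] * (slots - len(ordered))
    return values

def fight_or_flight_inputs(main_enemies, other_enemies, objects_and_clouds):
    """Inputs 1-16: two A slots, two B slots and four O/CL slots."""
    return (
        encode_slots(main_enemies, 2)
        + encode_slots(other_enemies, 2)
        + encode_slots(objects_and_clouds, 4)
    )

# One A at direction 0.5 rad, 2 time units away; no B's, objects or clouds.
inputs = fight_or_flight_inputs([(0.5, 2.0)], [], [])
```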

What about the player’s species? When the player is attacking prey or being attacked by a predator (when its fight or flight mode would have been active), the event is recorded and added to the databank. The player’s behavior matrix evolves to recreate what the player does. The recorded event could be an encounter with 2 predators of a certain species, or with any number of them. But the other species are asked to evolve against their deadliest encounters, while the encounters of the player are random. Can this be changed? I will get to this later. Old recordings can be deleted once the same type of encounter has been recorded multiple times, so if the player starts playing differently, his/her species changes its behavior too after some time.
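Keeping only the latest recordings of each encounter type could be done with a bounded queue per type. A sketch assuming recordings are grouped by a label such as “attacked by two of species X”; the limit of 5 and the labels are hypothetical. `collections.deque(maxlen=...)` drops the oldest entry automatically when a new one is appended to a full queue.

```python
from collections import defaultdict, deque

class RecordingBank:
    """Recorded player encounters, keeping only the newest few of each type."""

    def __init__(self, max_per_type=5):
        # Each encounter type gets its own bounded queue.
        self.by_type = defaultdict(lambda: deque(maxlen=max_per_type))

    def add(self, encounter_type, recording):
        # When a type is full, appending discards its oldest recording, so a
        # change in playstyle gradually replaces the old training data.
        self.by_type[encounter_type].append(recording)

    def training_set(self):
        """Every stored recording; the same ones are reused for training again and again."""
        return [r for group in self.by_type.values() for r in group]

bank = RecordingBank(max_per_type=2)
for rec in ["old", "newer", "newest"]:
    bank.add("attacked by two of species X", rec)
print(bank.training_set())  # ['newer', 'newest']
```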

Not starting from scratch

The training doesn’t have to start with random matrices. There can be pretrained neural networks. A developer can run the game on their computer before every update and see the different behaviors that appear: a predator, a prey, a tank build, a chemosynthesiser, etc. The most common of these can be copied and added to every Thrive game, similar to how planets will be pre-generated.

When two species interact for the first time in a mini simulation, they can try out all the behaviors that are included in the game and choose the one that is most useful to them. After that, they can slowly tweak that behavior and make it perfect for the particular environment they are in.

Choosing a preexisting behavior can happen later too. Let’s say that I am a new player who doesn’t know how to play the game well, and a species hunts me. I then pause the game, look at the Thrive wiki, and see that oxytoxy was intended for shooting. So I start shooting at my predator and kill it before it reaches me. In this case, the predator doesn’t have to slowly change its hunting behavior into a running-away behavior. It can choose a preexisting prey behavior and start surviving.

The other species can also choose the player’s modeled behavior, or any other species’ behavior. The player’s behaviors would live on in the species that speciated from the player’s species.

The species should try other behaviors every so often while they are evolving their behaviors.
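Trying all the built-in behaviors at first contact, and occasionally testing alternatives later, could be sketched as follows. It assumes a library of named pretrained behaviors and an `evaluate` function that runs a mini simulation and returns a score; the library contents, the 5% exploration chance and the toy scores are hypothetical.

```python
import random

def pick_starting_behavior(library, evaluate, trials=3):
    """Score every pretrained behavior over a few mini simulations; return the best name."""
    def average_score(name):
        return sum(evaluate(library[name]) for _ in range(trials)) / trials
    return max(library, key=average_score)

def maybe_try_other(current, library, evaluate, rng, explore_chance=0.05):
    """Occasionally test a random pretrained behavior and switch if it does better."""
    if rng.random() >= explore_chance:
        return current
    alternative = library[rng.choice(sorted(library))]
    return alternative if evaluate(alternative) > evaluate(current) else current

# Toy example: each "behavior" is just its own score.
library = {"predator": 1.0, "prey": 2.0, "tank": 0.5}
best = pick_starting_behavior(library, evaluate=lambda b: b)  # "prey"
```

After the starting choice, the small tweaks described above would take over, with `maybe_try_other` giving a chance to jump to a different behavior instead of staying stuck in one.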

You might be asking how this is different from just having sliders. Sliders were premade behaviors too, just without the final little tweaks. But there is another fundamental difference. The behaviors in the sliders had to be designed by the developers, but these behaviors appeared in games without preexisting behaviors and don’t carry the mistakes or generalizations a human programmer would make. They may get stuck in local maxima instead, though.

We report glitches on the community forums. I don’t know if the game can send reports automatically, but something like that could exist for behaviors. If a new behavior appears in a Thrive player’s game that is very dissimilar to the preexisting behaviors (either the player’s behavior or something the AI discovered), it can be reported and added to the next update, so that it takes less time to arrive at that behavior. And if it was using a glitch, the glitch can be removed. These reports would also help to model the player’s behavior faster.

After evolving, the species can keep their previous behaviors towards each other and build onto them, or switch to any other behavior at any point.

[1] Dying at a time when the population should be decreasing isn’t that bad, but the information about how it happens may be useful to auto-evo.

[2] Things like parasitism, filter feeding and herbivory don’t require a fight or flight mode.

[3] Google image result for “realistic food web”.

[4] I don’t know how both of them could try to maximize their success at the same time.

[5] By “evolved” I refer to the way the new behavior is created in the game. In the game’s lore, the behavior should have appeared long before, alongside the bodily mutations, not while the bodies are constant.