First of all: I can always appreciate a well-thought-out model, and it is obvious that you put quite a bit of effort into this. Keep up the good work, and don’t be discouraged by my nitpicking about small problems in terms of gameplay or biology. After all, long-term motivation might very well be the most important part of one’s mindset when contributing to the development of a game as ambitious as Thrive.

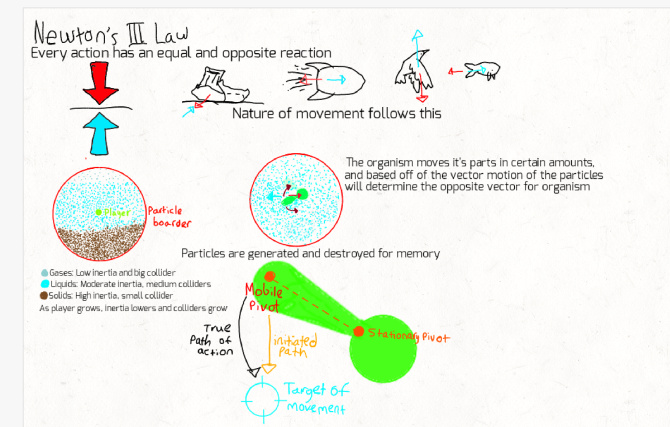

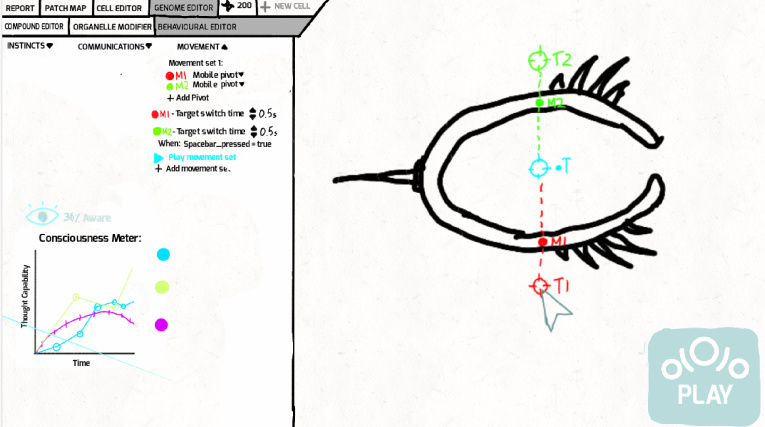

That said, I am not entirely happy with the model. Intuitive as this system might be, I fear it wouldn’t scale well. By that I mean it might be an elegant solution for early multicellular life and even primitive aware creatures, which have simple body plans and lack precise, coordinated movements. But as soon as you bring actual specialized limbs into the equation, it could become a mess quite quickly. Let’s model a human arm with this system, shall we? We will notice right off the bat that it has a ton of pivots (upper arm, lower arm, hand, every segment of each finger…), each of which only allows certain types of movement relative to its neighbours (the upper arm can’t rotate against the lower arm, etc.). Fine-tuning even the basics of this might be tedious, but with pivots grouped together and a good UI, it should not be too big of a problem. Instead, my main concerns are these:

Most limbs can perform very different movement sets depending on the situation. An arm can grasp with its hand, punch, grab and pull or push something, perform gestures for intraspecific communication, etc. We would have to set a hotkey for each of these movement sets, resulting in half of the keyboard being taken up by the options for a single limb. Then why don’t we just hotkey the corresponding movement sets of multiple limbs together? After all, there is no need to control both legs separately when running, right?

But this leaves us with another problem: the position of the body relative to its environment. A meticulously designed bipedal walking movement set may work perfectly on flat ground, but if we introduce even a slight slope the creature would probably topple over, since it performs the same movements with its center of gravity shifted. Again, this might work out for the simple movements of multicellular organisms floating in a tidepool, but for complex terrestrial organisms it would probably not be enough. For example, to grasp something, you have to coordinate your arm so that your fingers are in the correct position to actually hold the object when they close, but always moving hands and arms separately would be tough for the player. How about moving sideways or jumping, which would be highly dependent on the surface? What about climbing, where precise grasping motions and balance (something the game will probably never be able to handle realistically by itself, since it is just so complex) are key?
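To put a rough number on the slope problem, here is a minimal sketch of a static balance check. It is not how Thrive does or would do it; the function names, the 2D simplification, and the example dimensions (a center of mass 1.0 m above the ground, feet reaching 0.12 m to either side of the foot center) are all assumptions made up for illustration. The idea is that a fixed movement set keeps the body perpendicular to the ground, so on a slope the point directly below the center of mass slides downhill, and once it leaves the area covered by the feet the creature tips over.

```python
import math


def com_offset_along_slope(com_height: float, slope_deg: float) -> float:
    """Distance along the ground between the foot center and the point
    vertically below the center of mass, for a body that stays
    perpendicular to the ground (i.e. replays its flat-ground pose)."""
    return com_height * math.tan(math.radians(slope_deg))


def is_statically_stable(com_height: float, half_support: float,
                         slope_deg: float) -> bool:
    """True if the point below the center of mass still lies within the
    support range of the feet. Static only: ignores momentum and any
    corrective movement."""
    return abs(com_offset_along_slope(com_height, slope_deg)) <= half_support


def critical_slope_deg(com_height: float, half_support: float) -> float:
    """Steepest slope (in degrees) on which the unmodified pose stays upright."""
    return math.degrees(math.atan2(half_support, com_height))


if __name__ == "__main__":
    # Hypothetical biped: all numbers are illustrative assumptions.
    height, half_foot = 1.0, 0.12
    for angle in (0.0, 5.0, 10.0):
        print(angle, is_statically_stable(height, half_foot, angle))
    print(critical_slope_deg(height, half_foot))
```

With these made-up dimensions the pose stops being stable at a slope of roughly 7 degrees, which is why a movement set tuned on flat ground would need some form of automatic correction rather than a separate hotkey for every terrain.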

So, in short, I don’t see this model working well for the aware stage in its current form, even though it looks really good for the multicellular stage. I also don’t think that switching the movement system completely between the stages would be a good idea, so we will probably need to work on a good base that can be expanded easily, keeping it intuitive for the player to learn as they progress towards a more complex anatomy and different environments.